Fictional Scenario

I will use a fictitious hotel firm as the example. Its current customer management system is built on a J2EE application container with EJBs. The modernization is being driven by the fact that the J2EE container is no longer supported by the vendor, and the team wants to move to a progressive web application powered by APIs. The hotel has been using Mulesoft as the API management engine for another business area with pretty good success (APIs and batch Mulesoft flows in production), and the hotel's IT strategy is API first. I will focus the discussion on one screen that manages customer data (name, address, phone number, email, etc.) and on how Mulesoft and SparkJava compare on the API front.

What is SparkJava?

SparkJava bills itself as "a simple and expressive Java/Kotlin web framework DSL built for rapid development". My background with SparkJava comes from researching it for this blog and for a review at work. The one thing that jumped out at me was how easy it is to create a route for an endpoint, as shown on their site:

    import static spark.Spark.*;

    public class HelloWorld {
        public static void main(String[] args) {
            get("/hello", (req, res) -> "Hello World");
        }
    }

If you are not familiar with the SparkJava web framework, their site is a good place to start learning more - http://sparkjava.com/

What is Mulesoft?

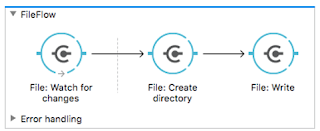

Mulesoft bills itself as "MuleSoft provides the most widely used integration platform (Mule ESB & CloudHub) for connecting SaaS & enterprise applications in the cloud and on-premise". I have been working with Mulesoft for around 18 months, and the Anypoint Platform has top-notch tools for API management, including the API Manager, Design Center/Anypoint Studio, and Exchange.

The API Manager lets you manage security policies, monitor APIs, and set API alerts. Design Center and Anypoint Studio provide an online and a downloadable IDE, respectively, for developing APIs. The Exchange contains your API listing for self-service discovery of existing APIs and allows testing against mock APIs based only on RAML definitions.

More information about Mulesoft - https://www.mulesoft.com/

Mulesoft compared with SparkJava for APIs

API Security

My personal preference is that APIs should, at a minimum, have basic security with a client id and client secret to limit and track users of the API. API managers can also provide additional security functions such as IP filters, OAuth 2.0 integration, and throttling to support the needs of your use case.

Mulesoft:

Mulesoft's Anypoint API Manager lets you provision security policies on the fly or define your security prior to deployment. The available policies include client id/secret enforcement, IP blacklisting/whitelisting, throttling, JSON/XML threat protection, and OAuth 2.0. Here is a really nice write-up of Mulesoft's features with some in-depth details - https://blogs.mulesoft.com/dev/api-dev/secure-api/

SparkJava:

SparkJava is meant to be a lean web framework and doesn't provide API security out of the box; you need custom code or additional libraries for it.
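To give a feel for the kind of custom code involved, here is a minimal sketch of a client id/secret check in plain Java. The class name `ClientCredentials`, the in-memory credential map, and the `client_id`/`client_secret` header names are all assumptions made for illustration, not something SparkJava ships. The trailing comment shows one way it could be hooked into Spark's `before` filter.

```java
import java.nio.charset.StandardCharsets;
import java.security.MessageDigest;
import java.util.Map;

// Hypothetical helper: validates a client id/secret pair against a registry.
final class ClientCredentials {
    // Assumption: credentials are held in memory; a real service would
    // load them from a secure store instead.
    private final Map<String, String> secretsByClientId;

    ClientCredentials(Map<String, String> secretsByClientId) {
        this.secretsByClientId = Map.copyOf(secretsByClientId);
    }

    boolean isAuthorized(String clientId, String clientSecret) {
        // Missing headers arrive as null and are rejected up front.
        if (clientId == null || clientSecret == null) {
            return false;
        }
        String expected = secretsByClientId.get(clientId);
        if (expected == null) {
            return false;
        }
        // MessageDigest.isEqual compares in constant time, which avoids
        // leaking how much of the secret matched through response timing.
        return MessageDigest.isEqual(
                expected.getBytes(StandardCharsets.UTF_8),
                clientSecret.getBytes(StandardCharsets.UTF_8));
    }

    public static void main(String[] args) {
        ClientCredentials creds =
                new ClientCredentials(Map.of("hotel-web", "s3cret"));
        System.out.println(creds.isAuthorized("hotel-web", "s3cret"));
        System.out.println(creds.isAuthorized("hotel-web", "wrong"));
    }
}

// Possible wiring in a Spark application (needs the Spark dependency,
// so it is shown as a comment only; header names are assumptions):
//
//   before((req, res) -> {
//       if (!creds.isAuthorized(req.headers("client_id"),
//                               req.headers("client_secret"))) {
//           halt(401, "Unauthorized");
//       }
//   });
```

This only covers the most basic check. IP filtering, throttling, and OAuth 2.0 would each need their own code or a library on top.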

API Security Conclusion

Mulesoft, being a true API management solution, provides the expected security via the Anypoint API Manager, whereas with a light web framework like SparkJava security has to be bolted on. If you're in a highly regulated industry or handle sensitive information, I think using an API management solution is a must; if you aren't, frameworks like Spark would be OK for APIs.

API Usage Metrics

Most product owners and development teams need insight into the usage of their applications/APIs. These metrics are a foundational element for managing the future of the product.

Mulesoft:

Again, Mulesoft is a true API management solution, and it captures metrics on every request to the API. Mulesoft provides real-time dashboards and custom reports that can contain the request, request size, response, response size, browser, IP, city, hardware, OS, etc. More details here - https://docs.mulesoft.com/analytics/viewing-api-analytics

SparkJava:

With SparkJava you would need to write the same metrics that an API management tool captures out of the box to either a log or a data stream. This requires additional coding in your application, plus a data visualization platform to view the results.
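As a rough illustration of that extra coding, the sketch below formats one request's metrics as a single key=value log line that a log analytics tool could then index. The class name `RequestMetrics`, the field names, and the line layout are my own assumptions. In a Spark application the values would likely come from an `after` filter (for example `req.requestMethod()`, `req.pathInfo()`, `req.ip()`, and `res.status()`), and the line would go to your logger rather than standard output.

```java
import java.time.Instant;
import java.util.Locale;

// Hypothetical helper: turns one request's metrics into a flat log line.
final class RequestMetrics {

    private RequestMetrics() {
        // Static helper only; no instances needed.
    }

    static String toLogLine(Instant timestamp, String method, String path,
                            int status, long requestBytes, long responseBytes,
                            String clientIp, long durationMillis) {
        // Locale.ROOT keeps the number formatting stable across machines.
        return String.format(Locale.ROOT,
                "ts=%s method=%s path=%s status=%d reqBytes=%d respBytes=%d ip=%s durationMs=%d",
                timestamp, method, path, status,
                requestBytes, responseBytes, clientIp, durationMillis);
    }

    public static void main(String[] args) {
        // Example values only; a real filter would read them per request.
        String line = toLogLine(Instant.parse("2024-01-01T00:00:00Z"),
                "GET", "/customers/42", 200, 0L, 512L, "203.0.113.7", 18L);
        System.out.println(line);
    }
}
```

Fields such as city, browser, or hardware would need extra lookups (for instance geo-IP or User-Agent parsing) that this sketch leaves out.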

API Metrics Conclusion

Whether Mulesoft's metrics collection is a must-have depends on your environment. If you don't have access to a data analytics solution like Splunk or ELK, it really is; if you do, a solution like SparkJava should need only minor log4j entries to get the same types of data out to be consumed.

API Discovery and Reuse

API discovery and reuse are central to not reinventing access to data, and they speed up a delivery team's ability to release new features or whole new applications. Having one place for all developers, with proper security, allows faster adoption and keeps data segregated. API management solutions should have this as part of the base product.

Mulesoft:

Mulesoft's Anypoint Platform has the Exchange, where developers can upload RAML that defines an API; others can then find the API and even run mock tests against the RAML spec. The Exchange even allows UI and backend API development to get started once a RAML spec is created and uploaded. Engineers are able to find new APIs and even review current APIs to help others who come after them. More details about the Exchange - https://www.mulesoft.com/exchange/

SparkJava:

SparkJava, being a thin web framework, has no concept of an API definition and no API repository. This can be both a strength and a weakness depending on your use case. You could even use Mulesoft as an API reverse proxy, using RAML to define the endpoint and having Mulesoft call the SparkJava API underneath it.

API Discovery and Reuse Conclusion

When building APIs, having a central repository is key to helping engineers find and reuse existing APIs. Mulesoft provides a really excellent product called Exchange where developers can share APIs and API fragments; it can even reference non-Mulesoft APIs, though with limited functionality from the Mulesoft IDEs.